Multi-Feature Stationary Foreground Detection for Crowded

Video-Surveillance

[Description][Related publication][Abstract][Overview][Software][Dataset][Algorithm results]

This page provides

the software, ground-truth data and results achieved by the proposed algorithm for

Stationary Foreground Detection in crowds. Files with annotations of stationary objects,

persons and groups are provided.

D. Ortego and J.C. SanMiguel

Multi-Feature Stationary Foreground Detection for Crowded Video-Surveillance

21st IEEE International Conference on Image Processing (ICIP 2014), Paris (France), (Accepted)

Contact Information

Diego Ortego - show

email

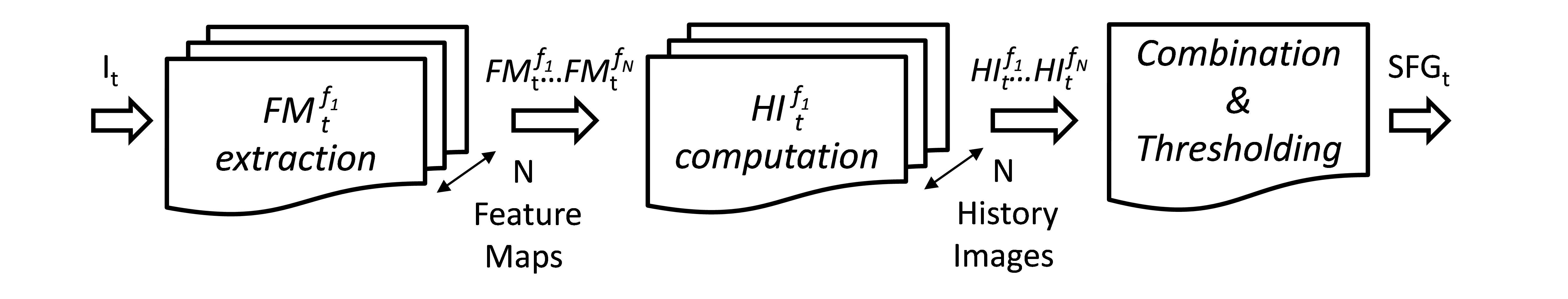

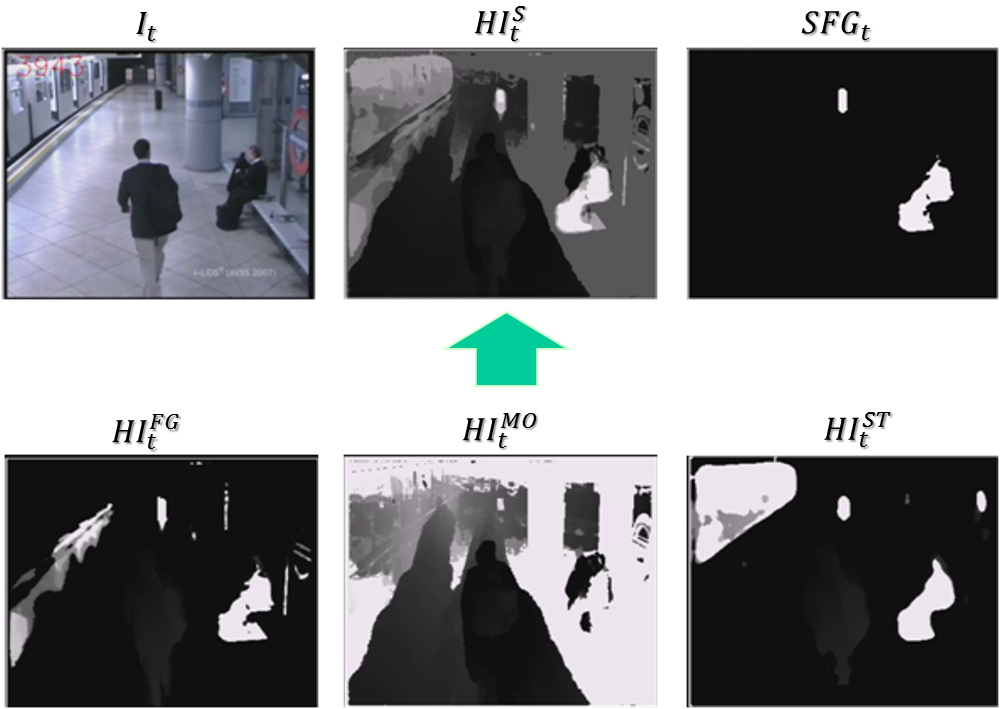

In our proposed approach N=3, thus computing three History Images (HI). Features extracted are: Foreground (FG), Motion (MO) and Structure (ST). HI combination yields to an image that describes spatio-temporal stationarity (HIS). Thresholding the mentioned combination a Static Mask (SFGt), robust to illumination changes and continuous motion areas, is obtained.

Multi-Feature Stationary Foreground Detection software is available here

Poster from ICIP 2014 is available here

Sequences from AVSS 2007 dataset: AVSS07_AB_Easy, AVSS07_AB_Medium, AVSS07_AB_Hard, AVSS07_AB_Eval and AVSS07_PV_Eval. Extracted from here

Sequences from PETS 2006 dataset: PETS06_S1_C1, PETS06_S1_C4, PETS06_S4_C1, PETS06_S4_C2, PETS06_S4_C3, PETS06_S4_C4, PETS06_S7_C1, PETS06_S7_C3 and PETS06_S7. Extracted from here

Sequences from PETS 2007 dataset: PETS07_S5_C1, PETS07_S5_C2 and PETS07_S5_C3. Extracted from here

The ground-truth for all the targets in the dataset is

available here

Results from AVSS 2007 dataset are available here

Results from PETS 2006 dataset are available here

Results from PETS 2007 dataset are available here